
However, applying perceptrons gives us a better understanding of the XOR dataset. XOR can be represented by a two-layer neural network. A perceptron computes a linear function of its inputs, so it can only learn linearly separable functions. Indeed, it was shown in 1969 that single-layer perceptrons are incapable of learning the XOR function.

The exclusive-or function is not linearly separable, which means that its two output classes cannot be separated by a single line in a two-dimensional space. A single neuron can only represent linearly separable functions, which means that it is not able to learn the exclusive-or function. As we can see, the Perceptron predicted the correct output for logical OR. Similarly, we can train our Perceptron to predict for the AND and XOR operators. But there is a catch: the Perceptron learns the correct mapping for AND and OR, but not for XOR.

[Figure: Tessellation surface formed by the πt-neuron model and the proposed model for two-dimensional input.]

However, this model also has a similar issue in training for higher-order inputs. A neural network is a machine learning model that is used to capture complex patterns in data. One of the classic exercises for neural networks is solving the XOR problem, a standard example of a problem that is not linearly separable.

This is because of the input-dimension-dependent adaptable scaling factor (given in equation ). The effect of the scaling factor was discussed in the previous section (as depicted in Figure 2): a larger scaling factor supports backpropagation (BP) and results in proper convergence in the case of higher-dimensional input. Figure 4 demonstrates the optimal value of the scaling factor ‘b’. XOR is a classical problem in artificial neural networks.
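To make the effect of a scaling factor on the sigmoid concrete, here is a minimal sketch. It assumes the scaled sigmoid has the form σ(b·x); the exact equation used by the model is not reproduced in this text, so this form and the function names are illustrative assumptions only.

```python
import math

def scaled_sigmoid(x, b=1.0):
    """Sigmoid with a scaling factor b applied to its input (assumed form)."""
    return 1.0 / (1.0 + math.exp(-b * x))

def scaled_sigmoid_grad(x, b=1.0):
    """Derivative of scaled_sigmoid with respect to x: b * s * (1 - s)."""
    s = scaled_sigmoid(x, b)
    return b * s * (1.0 - s)

# Compare the slope at the origin for a few scaling factors.
for b in (1.0, 2.0, 4.0):
    print(f"b={b}: gradient at x=0 is {scaled_sigmoid_grad(0.0, b)}")
```

Under this assumed form, the gradient at x = 0 equals b/4, so a larger b steepens the slope around the origin.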

multi-layer-perceptron

Forecasting of time series has been performed using multiplicative neuron models [24–26]. Yildirim et al. have proposed a threshold single multiplicative neuron model for time series prediction. They utilized a threshold value and used the particle swarm optimization and harmony search algorithms to obtain the optimum weight, bias, and threshold values. Yolcu et al. have used autoregressive coefficients to predict the weights and biases for time series modeling. A recurrent multiplicative neuron model has also been presented for forecasting time series.

And this was the only purpose of coding the XOR neural network from scratch. Next, define the optimization algorithm used to update the parameters. We are using a simpler optimization technique here.

Some machine learning algorithms, like neural networks, are already a black box: we feed input into them and expect magic to happen. Still, it is important to understand what is happening behind the scenes in a neural network. Coding a simple neural network from scratch acts as a proof of concept in this regard and further strengthens our understanding of neural networks. We use the squared error loss. Since there may be many weights contributing to this error, we take the partial derivative of the error with respect to each weight, one at a time, to find the minimum error. The change in weights is computed differently for the output layer weights (W31 & W32) and for the hidden layer weights. Backpropagation is a way to update the weights and biases of a model, starting from the output layer and working all the way back to the beginning.
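The backpropagation update described above can be sketched in plain Python for a 2-2-1 network. This is an illustrative sketch, not the post's original code: the sigmoid activation, the learning rate of 0.5, the 5000 epochs, the fixed seed, and the names `wh`, `bh`, `wo`, `bo` are all assumptions chosen for the example.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, wh, bh, wo, bo):
    """Forward pass of a 2-2-1 network; returns hidden activations and output."""
    h = [sigmoid(wh[j][0] * x[0] + wh[j][1] * x[1] + bh[j]) for j in range(2)]
    y = sigmoid(wo[0] * h[0] + wo[1] * h[1] + bo)
    return h, y

def total_loss(data, wh, bh, wo, bo):
    """Sum of squared errors, 0.5 * (y - t)^2, over the dataset."""
    return sum(0.5 * (forward(x, wh, bh, wo, bo)[1] - t) ** 2 for x, t in data)

def train(data, epochs=5000, lr=0.5, seed=0):
    rng = random.Random(seed)
    wh = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)]  # hidden weights
    bh = [rng.uniform(-1, 1) for _ in range(2)]                      # hidden biases
    wo = [rng.uniform(-1, 1) for _ in range(2)]                      # output weights
    bo = rng.uniform(-1, 1)                                          # output bias
    initial = total_loss(data, wh, bh, wo, bo)
    for _ in range(epochs):
        for x, t in data:
            h, y = forward(x, wh, bh, wo, bo)
            # Output layer: dE/dz_o = (y - t) * y * (1 - y)
            delta_o = (y - t) * y * (1.0 - y)
            # Hidden layer: propagate delta_o back through the output weights
            delta_h = [delta_o * wo[j] * h[j] * (1.0 - h[j]) for j in range(2)]
            for j in range(2):
                wo[j] -= lr * delta_o * h[j]
                bh[j] -= lr * delta_h[j]
                for i in range(2):
                    wh[j][i] -= lr * delta_h[j] * x[i]
            bo -= lr * delta_o
    return initial, total_loss(data, wh, bh, wo, bo), (wh, bh, wo, bo)

XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
initial_loss, final_loss, params = train(XOR_DATA)
print(f"loss before training: {initial_loss:.4f}, after: {final_loss:.4f}")
```

Note that the hidden-layer deltas are computed before the output weights are changed, so both layers use the same forward pass. Depending on the starting weights, a run like this may still settle in a poor local minimum, so trying a different seed is a reasonable first step if the outputs do not approach 0 and 1.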

THE LEARNING ALGORITHM

Neural networks are a type of algorithm that is capable of learning. For learning to happen, we need to train our model with sample input/output pairs; such learning is called supervised learning. The supervised learning approach has given impressive results in deep learning when applied to diverse tasks like face recognition, object identification, and NLP tasks. Most practically applied deep learning models in areas such as robotics and automotive are based on the supervised learning approach.

Therefore, our proposed model has overcome the limitations of the previous πt-neuron model. Table 5 provides the threshold values obtained by both the πt-neuron model and the proposed model. In experiment #2 and experiment #3, the πt-neuron model has predicted threshold values beyond the range of inputs, i.e., .

Last Linear Transformation in Representational Space

After compiling the model, it’s time to fit it to the training data for 1000 epochs. After training the model, we will calculate the accuracy score and print the predicted output on the test data. The learning rate determines how much the weights and biases are changed after every iteration so that the loss is minimized; we have set it to 0.1. We have defined the getORdata function for fetching inputs and outputs. Similarly, we can define getANDdata and getXORdata functions using the same set of inputs. We start with random synaptic weights, which almost always leads to incorrect outputs.
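The training setup above can be approximated without any library. The sketch below is a plain-Python stand-in for the Keras model the text describes, not a reproduction of it: the helper names `get_or_data`, `get_and_data`, and `get_xor_data` mirror the getORdata, getANDdata, and getXORdata functions mentioned above, but their bodies, the step activation, and the fixed seed are assumptions. The learning rate of 0.1 and the 1000 epochs come from the text.

```python
import random

INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]

def get_or_data():
    return INPUTS, [0, 1, 1, 1]

def get_and_data():
    return INPUTS, [0, 0, 0, 1]

def get_xor_data():
    return INPUTS, [0, 1, 1, 0]

def predict(weights, bias, x):
    """Step-activation perceptron: fire when the weighted sum is positive."""
    return 1 if weights[0] * x[0] + weights[1] * x[1] + bias > 0 else 0

def train_perceptron(inputs, targets, epochs=1000, lr=0.1, seed=0):
    rng = random.Random(seed)
    weights = [rng.uniform(-1, 1), rng.uniform(-1, 1)]  # random starting weights
    bias = rng.uniform(-1, 1)
    for _ in range(epochs):
        for x, t in zip(inputs, targets):
            # Perceptron rule: move the weights only when the prediction is wrong.
            error = t - predict(weights, bias, x)
            weights[0] += lr * error * x[0]
            weights[1] += lr * error * x[1]
            bias += lr * error
    return weights, bias

def accuracy(weights, bias, inputs, targets):
    hits = sum(predict(weights, bias, x) == t for x, t in zip(inputs, targets))
    return hits / len(targets)

for name, getter in [("OR", get_or_data), ("AND", get_and_data), ("XOR", get_xor_data)]:
    xs, ts = getter()
    w, b = train_perceptron(xs, ts)
    print(name, "accuracy:", accuracy(w, b, xs, ts))
```

Because OR and AND are linearly separable, the perceptron rule settles on weights that classify all four inputs correctly. For XOR no such weights exist, so at least one of the four inputs is always misclassified no matter how long training runs.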

Shiho Kim supervised the study and was responsible for project administration and funding acquisition. All authors have read and agreed to the published version of the paper. For correspondence, any of the authors can be addressed (Ashutosh Mishra, Jaekwang Cha, and Shiho Kim).

[Table: Scaling factor and loss obtained by the πt-neuron and proposed models with increasing input dimension of the N-bit parity problem.]

Further, we have compared the training performance of the πt-neuron model with our proposed model for the 10-bit parity problem. Training results of both models are shown in Figure 7 (by plotting binary cross-entropy loss versus the number of iterations). An XOR neural network is a type of artificial neural network that is used for solving the exclusive-or problem. The exclusive-or problem is a two-input, one-output problem that is not linearly separable. The XOR neural network is composed of two input nodes, two hidden nodes, and one output node. The hidden nodes are connected to the input nodes with weights that are learned during training.

Even with pretty good hyperparameters, I observed that the learned XOR model is trapped in a local minimum about 15% of the time. It works even better when the input is shifted to have zero mean.

Modelling the OR part

These systems were able to learn formal mathematical rules to solve problems and were deemed intelligent systems. To solve this problem, active research started in mimicking the human mind, and in 1958 one such popular learning network, called the “Perceptron”, was proposed by Frank Rosenblatt. Perceptrons got a lot of attention at that time, and many variations and extensions of perceptrons appeared later. But not everyone believed in the potential of perceptrons; there were people who believed that true AI is rule-based, and the perceptron is not rule-based.

The main principle behind it is that each parameter changes in proportion to how much it affects the network’s output. Though there are many kinds of activation functions, we’ll be using a simple linear activation function for our perceptron. The linear activation function has no effect on its input and outputs it as is. These parameters are what we update when we talk about “training” a model.

In this case, no single hyperplane can separate the output classes for this function definition.

[Figure: Effect of scaling factor on the gradient of the sigmoid function.]

This means that each data point belongs to exactly one class. This type of dataset is often used in machine learning and data mining applications, where it is important to be able to distinguish between different classes of data. The XOR function cannot be learned by a single neuron.

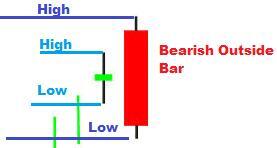

Hence we cannot directly solve the XOR problem with two neurons. The following images show that no matter how we draw a line in 2D space, we cannot separate the outputs on one side from those on the other. For example, the inputs (0, 1) and (1, 0) both make XOR give 1. But for the inputs (0, 0) and (1, 1) the output is 0, and we cannot separate them: unfortunately, points with different outputs fall on the same side of the line. Of the two neurons, the first represents logic AND and the other logic OR.
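One way to see how an AND neuron and an OR neuron combine into XOR is to wire them up by hand. The sketch below uses hand-picked weights and thresholds rather than learned ones; the specific values and the function names are assumptions for illustration.

```python
def step(z):
    """Threshold activation: 1 when the weighted sum is positive, else 0."""
    return 1 if z > 0 else 0

def xor_network(a, b):
    h_and = step(a + b - 1.5)  # hidden neuron 1: fires only for (1, 1)
    h_or = step(a + b - 0.5)   # hidden neuron 2: fires when any input is 1
    # Output neuron: fires when OR is on but AND is off.
    return step(h_or - h_and - 0.5)

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```

Each hidden neuron draws one line in the input plane, and the output neuron keeps only the region between the two lines, which is exactly where XOR is 1.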

The selection of loss and cost functions depends on the kind of output we are targeting. For classification we use a cross-entropy cost function. In Keras we have a binary cross-entropy cost function for binary classification and a categorical cross-entropy function for multi-class classification. Based on the problem at hand we expect different kinds of output; e.g., for a cat recognition task we expect the system to output Yes or No for cat or not cat, respectively.
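For reference, binary cross-entropy can be computed by hand in a few lines. This is a minimal plain-Python sketch of the standard formula, not the Keras implementation; the function name and the clipping constant `eps` are assumptions made for the example.

```python
import math

def binary_cross_entropy(targets, predictions, eps=1e-12):
    """Mean binary cross-entropy between 0/1 targets and predicted probabilities."""
    total = 0.0
    for t, p in zip(targets, predictions):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(targets)

# XOR targets with confident-and-correct versus undecided predictions.
confident = binary_cross_entropy([0, 1, 1, 0], [0.1, 0.9, 0.9, 0.1])
unsure = binary_cross_entropy([0, 1, 1, 0], [0.5, 0.5, 0.5, 0.5])
print(f"confident: {confident:.4f}, unsure: {unsure:.4f}")
```

Predictions stuck at 0.5 cost ln 2 per sample, while confident correct predictions cost much less, which is why this loss pushes the outputs toward 0 and 1.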

The sample code from this post can be found here.

But before solving the XOR problem with two neurons, I want to discuss linear separability. A problem is linearly separable if a single hyperplane can serve as the decision boundary between the classes. Neural networks are complex to code compared to other machine learning models. If we compile the whole code of a single-layer perceptron, it will exceed 100 lines. To reduce the effort and increase the efficiency of the code, we will take the help of Keras, an open-source Python library built on top of TensorFlow.
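Linear separability can be checked by brute force for the two-input logic gates. The sketch below searches a small grid of candidate lines; the grid range, the step size, and the function name are arbitrary assumptions chosen for illustration.

```python
from itertools import product

POINTS = [(0, 0), (0, 1), (1, 0), (1, 1)]

def find_separating_line(labels, grid=None):
    """Search a small grid of (w1, w2, b) for a line w1*x1 + w2*x2 + b = 0
    that puts every class-1 point on the positive side and every class-0
    point on the non-positive side. Returns the first hit, or None."""
    if grid is None:
        grid = [k / 2 for k in range(-4, 5)]  # -2.0, -1.5, ..., 2.0
    for w1, w2, b in product(grid, repeat=3):
        if all((w1 * x1 + w2 * x2 + b > 0) == bool(t)
               for (x1, x2), t in zip(POINTS, labels)):
            return w1, w2, b
    return None

print("OR :", find_separating_line([0, 1, 1, 1]))
print("AND:", find_separating_line([0, 0, 0, 1]))
print("XOR:", find_separating_line([0, 1, 1, 0]))
```

A failed grid search alone would not prove anything, but for XOR the result holds for every line. The four conditions require b ≤ 0, w1 + b > 0, w2 + b > 0, and w1 + w2 + b ≤ 0. Adding the middle two gives w1 + w2 + b > -b ≥ 0, which contradicts the last.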

- There are various schemes for random initialization of weights.

- The implemented neural network evaluates XOR for two noisy inputs, A and B.

- Therefore, many researchers tried to find a suitable way out to solve the XOR problem [4–15].

This plot code is a bit more complex than the previous code samples but gives an extremely helpful insight into the workings of the neural network decision process for XOR. Let the outer layer weights be wo and the hidden layer weights be wh. Keep in mind that the XOR function can’t be solved by a simple Perceptron. We need a Neural Network with at least one hidden layer. In this article you saw what such a Neural Network could look like. If you want to dive deeper into Deep Learning and Neural Networks, have a look at our Recommendations.

The output node is connected to the hidden nodes with weights that are also learned during training. The XOR neural network is trained using the backpropagation algorithm. This blog is intended to familiarize you with the crux of neural networks and show how neurons work. The choice of parameters like the number of layers, neurons per layer, activation function, loss function, optimization algorithm, and epochs can be a game changer. And with the support of Python libraries like TensorFlow, Keras, and PyTorch, setting these parameters becomes easier and can be done in a few lines of code. Stay with us and follow up on the next blogs for more content on neural networks.